Deploy a Model by Pre-packaged Server

In this tutorial, we show how to deploy a model with a pre-packaged server. We deploy an Iris model using the SKLearn pre-packaged server.

Prerequisites

Enable Model Deployment in Group Management

Remember to enable model deployment in your group. Contact your admin if it is not enabled yet.

Tutorial Steps

Go to User Portal and select

Deployments.Then we are in model deployment list page, now clicking on

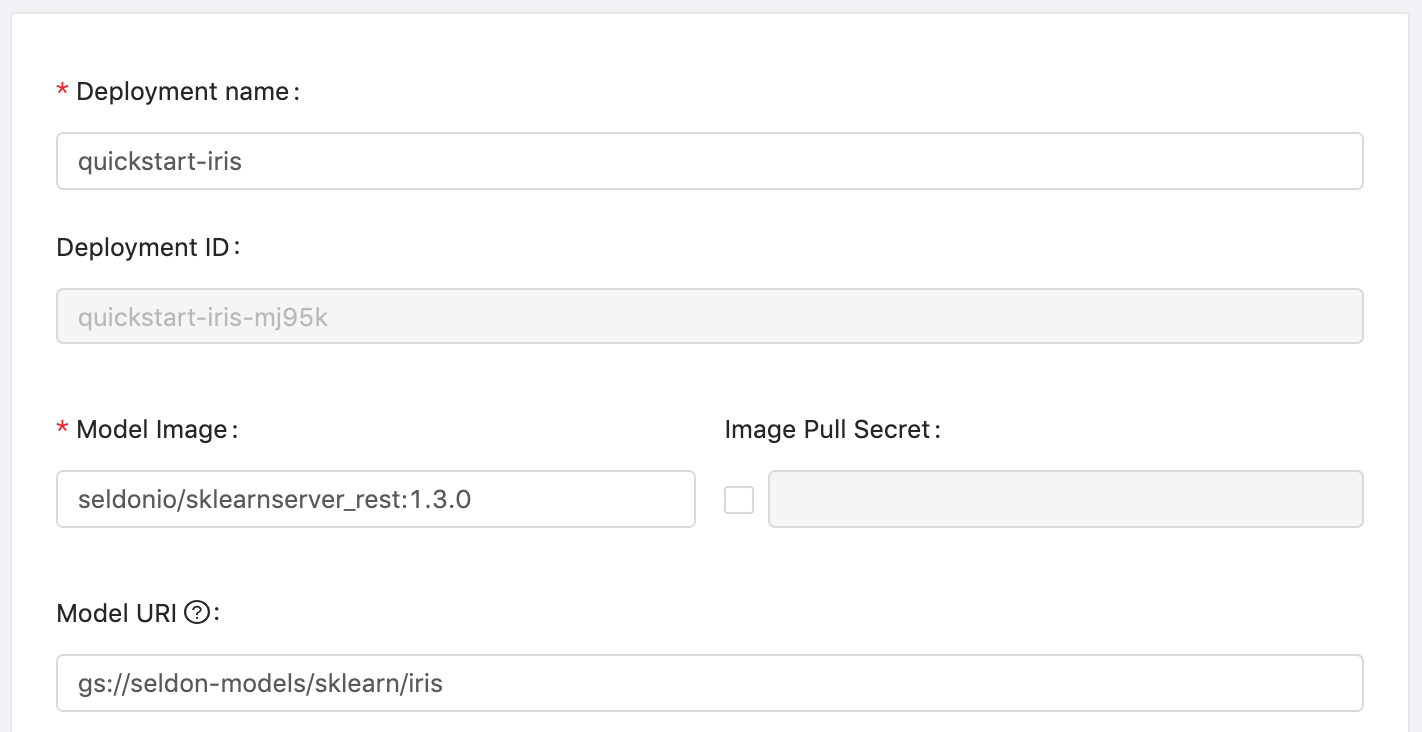

Create Deploymentbutton.Fill in the

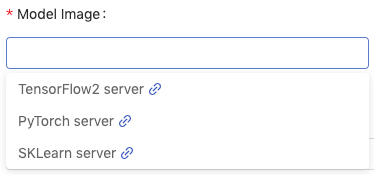

Deployment namefield withquickstart-irisSelect the

Model Imagefield withSKLearn server; This is a pre-packaged model server image that can servescikit-learnmodel.

Fill in the

Model URIfield withgs://seldon-models/sklearn/iris; This path is included the trained model in the Google Cloud Storage.

In the

Resources,- choose the instance type, here we use the one with configuration

(CPU: 0.5 / Memory: 1 G / GPU: 0) - leave

Replicasas default (1)

- choose the instance type, here we use the one with configuration

Click on

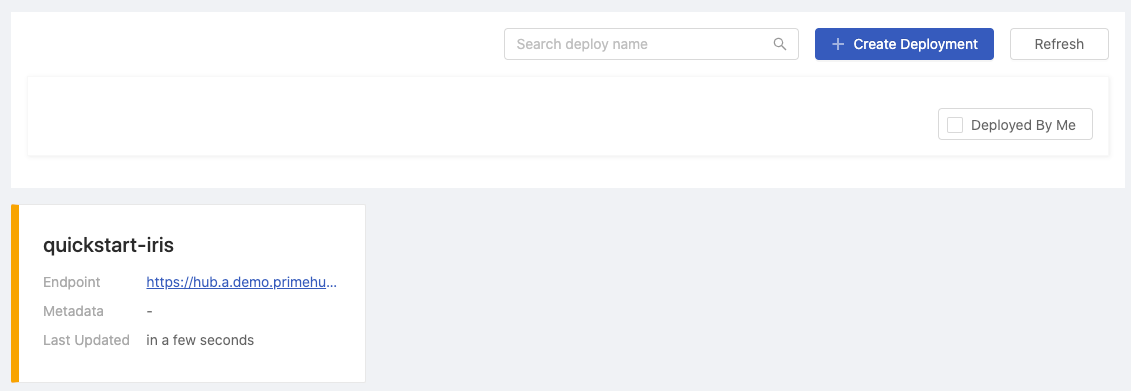

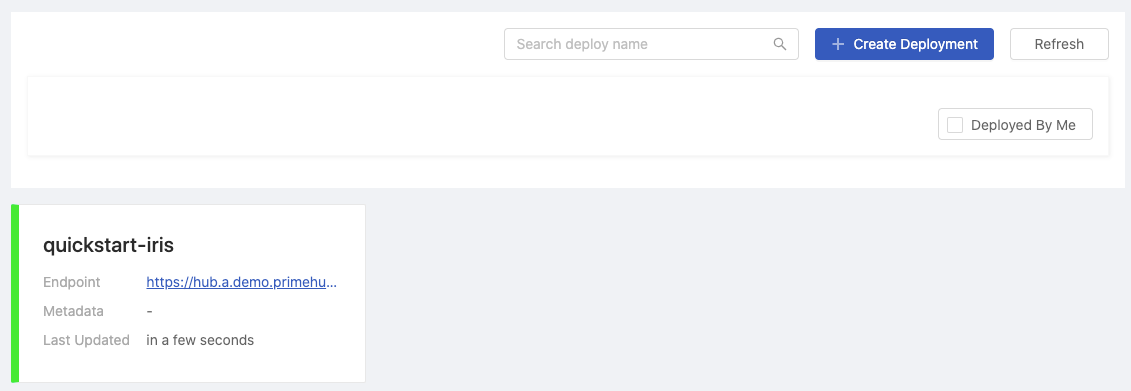

Deploybutton, then we will be redirected to model deployment list page. Wait for a while and click onRefreshbutton to check our model is deployed or not.

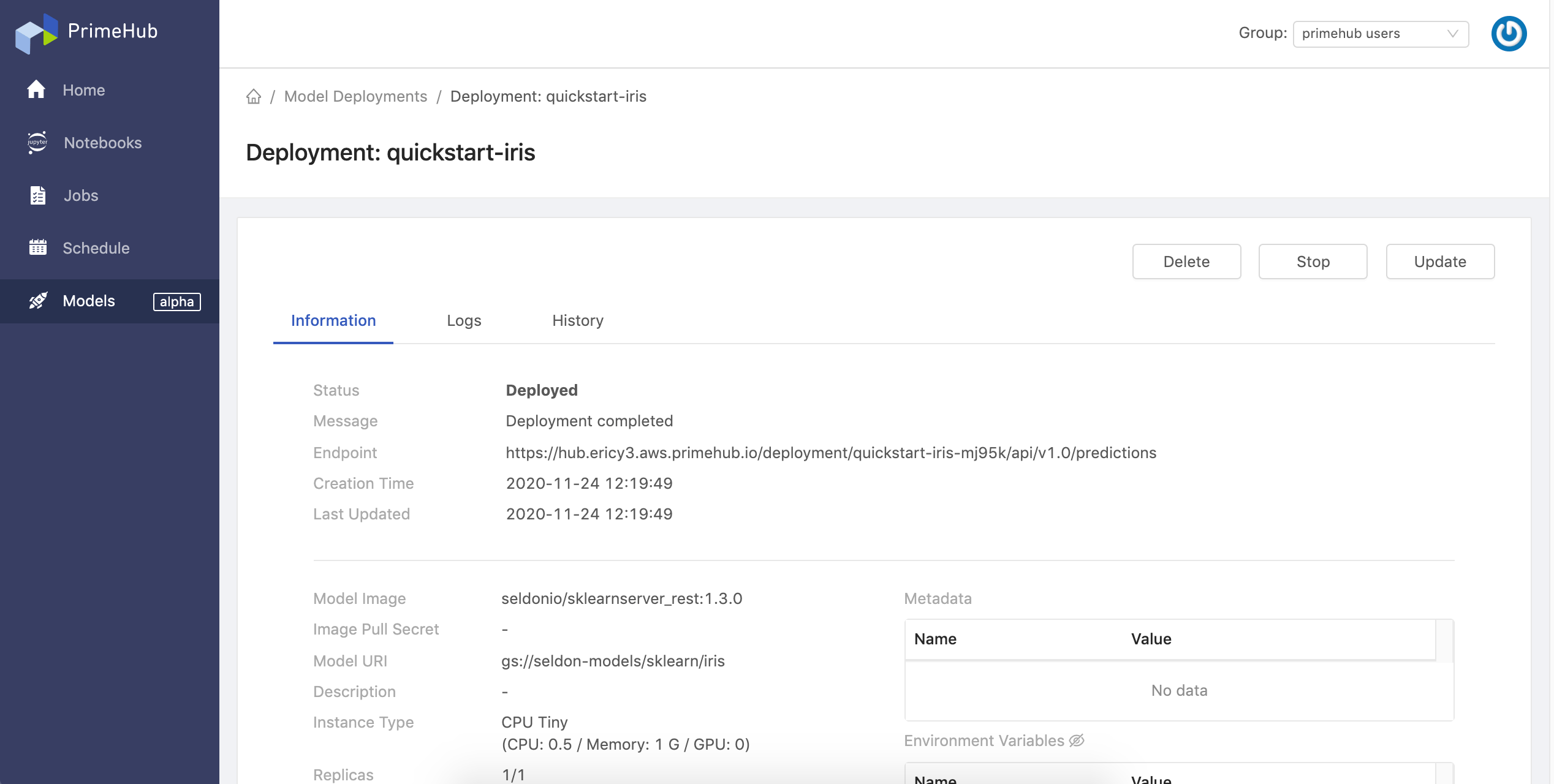
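For readers who like to keep a record of what was entered in the form, the settings above can be written down as a small Python structure. This is only an illustrative sketch: `DeploymentSettings` and `split_gs_uri` are hypothetical names invented here, not part of PrimeHub or of any deployment API, and the field values are simply the ones used in this tutorial.

```python
from dataclasses import dataclass
from typing import Tuple
from urllib.parse import urlparse


@dataclass(frozen=True)
class DeploymentSettings:
    """Hypothetical record of the form fields filled in during this tutorial."""
    name: str
    model_image: str
    model_uri: str
    cpu: float
    memory: str
    gpu: int
    replicas: int = 1  # the tutorial leaves Replicas at its default


def split_gs_uri(uri: str) -> Tuple[str, str]:
    """Split a gs:// URI into (bucket, path inside the bucket)."""
    parsed = urlparse(uri)
    if parsed.scheme != "gs" or not parsed.netloc:
        raise ValueError(f"not a Google Cloud Storage URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


settings = DeploymentSettings(
    name="quickstart-iris",
    model_image="SKLearn server",
    model_uri="gs://seldon-models/sklearn/iris",
    cpu=0.5,
    memory="1 G",
    gpu=0,
)

bucket, path = split_gs_uri(settings.model_uri)
print(bucket, path)
```

Splitting the URI makes it easy to see that the model lives under the `sklearn/iris` path of the `seldon-models` bucket.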

Once the deployment has been deployed successfully, click its cell to view the details.

The detail page shows detailed information about the deployment. Now let's test our deployed model! Copy the endpoint URL and replace `${YOUR_ENDPOINT_URL}` in the following block.

```bash
curl -X POST ${YOUR_ENDPOINT_URL} \
    -H 'Content-Type: application/json' \
    -d '{ "data": {"tensor": {"shape": [1, 4], "values": [5.3, 3.5, 1.4, 0.2]}} }'
```

Then copy the entire block to the terminal and run it. The request data is sent as a tensor.

- Example of request data

  ```bash
  curl -X POST https://hub.xxx.aws.primehub.io/deployment/quickstart-iris-xxx/api/v1.0/predictions \
      -H 'Content-Type: application/json' \
      -d '{ "data": {"tensor": {"shape": [1, 4], "values": [5.3, 3.5, 1.4, 0.2]}} }'
  ```

- Example of response data (it predicts the species is Iris setosa, as the first index has the highest prediction value)

  ```json
  {
    "data": {
      "names": ["t:0", "t:1", "t:2"],
      "tensor": {
        "shape": [1, 3],
        "values": [0.8700986370655746, 0.12989376988727133, 7.5930471540348975e-06]
      }
    },
    "meta": {}
  }
  ```
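The same request can also be sent from Python instead of curl. The following is a minimal sketch using only the standard library. The helper names (`build_payload`, `build_request`, `predicted_species`) are made up for this example, and the species order assumes the usual scikit-learn Iris class order (setosa, versicolor, virginica), which matches the statement above that index 0 is Iris setosa. The interpretation helper assumes a single-sample response like the one shown.

```python
import json
import math
import urllib.request

# Assumed class order of the scikit-learn Iris dataset (index 0 is setosa).
IRIS_SPECIES = ["setosa", "versicolor", "virginica"]


def build_payload(features):
    """Wrap one sample in the tensor format used by the curl example."""
    return {"data": {"tensor": {"shape": [1, len(features)],
                                "values": list(features)}}}


def build_request(endpoint_url, features):
    """Create (but do not send) a POST request equivalent to the curl command."""
    body = json.dumps(build_payload(features)).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predicted_species(response):
    """Return the species with the highest prediction value (single sample)."""
    tensor = response["data"]["tensor"]
    values = tensor["values"]
    if len(values) != math.prod(tensor["shape"]):
        raise ValueError("tensor values do not match the declared shape")
    best = max(range(len(values)), key=values.__getitem__)
    return IRIS_SPECIES[best]


# The example response from this tutorial, used here as sample input.
sample_response = {
    "data": {
        "names": ["t:0", "t:1", "t:2"],
        "tensor": {
            "shape": [1, 3],
            "values": [0.8700986370655746, 0.12989376988727133,
                       7.5930471540348975e-06],
        },
    },
    "meta": {},
}
print(predicted_species(sample_response))  # setosa

# To call a real deployment, substitute your endpoint URL and uncomment:
# req = build_request("${YOUR_ENDPOINT_URL}", [5.3, 3.5, 1.4, 0.2])
# with urllib.request.urlopen(req) as resp:
#     print(predicted_species(json.load(resp)))
```

Building the request separately from sending it keeps the payload easy to inspect before any network call is made.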

Congratulations! We have deployed a model as an endpoint service that can respond to requests anytime, from anywhere.

Reference

- For a complete introduction to the model deployment feature, see Model Deployment.

- For instructions on customizing a pre-packaged server, see Pre-packaged servers.